Tim Jeanes - TechEd 2009 - Day 2

Coincidentally in Berlin for the 20th anniversary of the fall of the Berlin Wall.

20 years ago today a bunch of us got away without doing our German homework because our teacher was in such a good mood.

WIA203 - Streaming With IIS and Windows Media Services

IIS and WMS are two quite separate products, and which you use depends largely on your specific requirements and your available architecture.

WMS2008 sits best on its own server, separate from the server holding the original content. It then does all its own smart caching, automatically dumping the less-viewed content from the cache and preserving the media that is more in demand. A raft of recent improvements means it can handle more than twice as many simultaneous connections as WMS2003 could.

On the other hand, if you're just delivering media from a web server, IIS Media Services may well be enough. It's a freely-available downloadable add-on to IIS that gives a bunch of features to improve media delivery.

It has some nice settings to make more efficient use of the available bandwidth. Typically users only watch 20% of the video media that they actually download, so the other 80% is wasted bandwidth that the media provider still has to pay for. You can configure IIS to treat media and other data files differently depending on their file type. Typically you'd set it to download as fast as possible for the first 5-20 seconds, then drop to a proportion of the required bit rate for the rest of the video. This gives a quick spike in bandwidth initially, followed by a constant rate that's just enough to ensure the user doesn't experience any delays in the media they're viewing.
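To get a feel for the throttling arithmetic, here is a rough sketch that models how many bytes would have been sent after a given time. It is not IIS configuration: the function name, the 10-second burst, the 8000 Kbps burst speed and the 110% throttle rate are all assumptions picked for illustration.

```javascript
// Hypothetical model of "fast start, then throttle" delivery.
// All parameter names and values are illustrative, not IIS settings.
function bytesSentAfter(seconds, opts) {
  var encodedBytesPerSec = (opts.encodedKbps * 1000) / 8;
  var burstBytesPerSec = (opts.burstKbps * 1000) / 8;
  var throttledBytesPerSec = (encodedBytesPerSec * opts.throttlePercent) / 100;

  var burstTime = Math.min(seconds, opts.burstSeconds);
  var throttledTime = Math.max(0, seconds - opts.burstSeconds);
  return burstTime * burstBytesPerSec + throttledTime * throttledBytesPerSec;
}

var settings = {
  encodedKbps: 800,     // bit rate the video was encoded at
  burstKbps: 8000,      // assumed line speed during the initial burst
  burstSeconds: 10,     // send as fast as possible for this long
  throttlePercent: 110  // then just above the playback rate
};

// Bytes delivered if the viewer gives up after 30 seconds.
console.log(bytesSentAfter(30, settings));
```

With numbers like these, a viewer who leaves early costs the provider only the burst plus a trickle, rather than the whole file.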

If you need to control how and what the end user watches (for example, they may need to be forced to watch an advert before they can see the main content), you can control how they can stream and download the content. In the playlist you define on the server you can enable or disable the user's ability to skip videos or to seek within them. The URLs of the actual videos being sent are automatically obfuscated to ensure that the URL of the main content isn't guessable by the end user.

Smooth streaming is now supported. This monitors the user's bandwidth and adapts the stream quality in almost-real time. This is achieved by splitting the original media into many 2-4 second long chunks and encoding each at several different qualities. IIS then delivers each chunk in succession, switching between qualities as the bandwidth allows. As it's monitored continuously, if the user experiences a temporary glitch, their video returns to its better quality within a few moments.
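The per-chunk quality switching can be pictured with a small sketch. This is only a guessed-at selection heuristic, not how IIS or the player actually decides; the function name, the quality levels and the bandwidth samples are all made up for the example.

```javascript
// Hypothetical heuristic: for each chunk, pick the highest encoded bit rate
// that fits within the most recently measured bandwidth.
function pickBitrate(availableKbps, encodedKbps) {
  var sorted = encodedKbps.slice().sort(function (a, b) { return a - b; });
  var choice = sorted[0]; // fall back to the lowest quality
  for (var i = 0; i < sorted.length; i++) {
    if (sorted[i] <= availableKbps) choice = sorted[i];
  }
  return choice;
}

var encodings = [350, 700, 1500, 3000];       // assumed quality levels, Kbps
var measured = [3200, 2900, 600, 800, 3100];  // assumed bandwidth per chunk, Kbps

// One decision per chunk: quality dips during the glitch, then recovers.
var plan = measured.map(function (kbps) {
  return pickBitrate(kbps, encodings);
});
console.log(plan); // [ 3000, 1500, 350, 700, 3000 ]
```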

Encoding at many bitrates is very CPU intensive, so doing this live is much harder work. Currently Microsoft has no offering that can manage this in real-time, so you'd need some third party hardware to do the hard work.

DEV317 - Agile Patterns: Agile Estimation

I suck at estimating timescales, and Getting Better At It has been on my list of tasks for the next period on every performance review I've had for the past 11 years. I'm glad to hear that I'm not alone - this was a very well-attended session where we commiserated together about how estimates (which are by definition based on incomplete information) become hard deadlines.

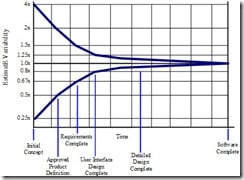

A key concept is the Cone Of Uncertainty. This shows how far out your estimates are likely to be at each stage of the project, narrowing as time goes by.

A couple of points to note are that initial project estimates, based on the loosest information, are often out by a factor of 4 - either too long or too short; even when you have a clear idea of what the customer wants, estimates are still out by a factor of 2. Also notably, we can't guess with 100% accuracy when the software will be delivered until it's totally complete. Asked for a show of hands, the vast majority of the room said they've woken in the morning expecting to release a product today, and then haven't: even on the last day, we can't guess how long we've got left.
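To make those factors concrete, here is a tiny sketch that turns a single estimate into the range it really implies. The factors of 4 and 2 are the ones quoted in the session; the stage names and the 40-day figure are my own placeholders.

```javascript
// Range implied by an estimate at a given stage of the project.
// Stage names are hypothetical labels for this example.
var UNCERTAINTY_FACTOR = { initial: 4, requirementsClear: 2, complete: 1 };

function estimateRange(estimateDays, stage) {
  var factor = UNCERTAINTY_FACTOR[stage];
  return { low: estimateDays / factor, high: estimateDays * factor };
}

console.log(estimateRange(40, "initial"));           // { low: 10, high: 160 }
console.log(estimateRange(40, "requirementsClear")); // { low: 20, high: 80 }
```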

As we can't avoid this, it's better to be honest about it and work with it.

User stories

The customer specifies a list of stories - items that must be in the product.

Planning poker

From user stories, we make estimates of difficulty. This is about relative difficulty and priorities, not time estimation. Planning poker cards represent orders of magnitude compared with a baseline. Everyone reveals their estimate simultaneously, and disagreements lead to discussion.

Take a baseline: a task you know well (such as a login page), then compare the complexity of each other item with this (this is twice as hard as that, etc.).
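A round of planning poker can be sketched in a few lines. This is just an illustration of the reveal-and-discuss step, assuming the common convention that the lowest and highest voters explain themselves; the function and the names are invented for the example.

```javascript
// Hypothetical planning poker round: everyone reveals a card at once.
// If the cards differ, the lowest and highest voters explain their reasoning.
function revealRound(votes) {
  var names = Object.keys(votes);
  var values = names.map(function (name) { return votes[name]; });
  var low = Math.min.apply(null, values);
  var high = Math.max.apply(null, values);
  if (low === high) {
    return { agreed: true, estimate: low };
  }
  return {
    agreed: false,
    discuss: names.filter(function (name) {
      return votes[name] === low || votes[name] === high;
    })
  };
}

// Cards are multiples of the baseline task (e.g. a login page = 1).
console.log(revealRound({ ann: 2, bob: 2, cat: 2 }));
console.log(revealRound({ ann: 1, bob: 2, cat: 8 }));
```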

Story points

Break down stories into smaller pieces (that will later become your individual work items). Give each a number of story points: a number of units of relative size - multiples of, say, a notional hour or day, depending on the scale of the project. These still aren't really your time estimates, as you don't yet know how quickly you're going to work through them.

Play planning poker again to decide the number of story points for each item.

Product backlog

The list of work items becomes your backlog, each with an estimate attached to it. At this point you meet with the customer to prioritise the items in the backlog.

Velocity

Developers commit to a number of story points for the first sprint. At the end of the first sprint, the number of completed story points is your velocity.

TFS has some plugins that help to monitor and calculate this.

Re-estimation

After each sprint, the customer may add more stories to the backlog, and can re-prioritise the backlog. The developers may also add bugs to the backlog.

Each sprint gives you increasingly accurate predictions of future delivery.
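As a minimal sketch of how velocity feeds a delivery forecast, assuming you simply average the completed story points over the sprints so far (the function name and the numbers are illustrative only):

```javascript
// Velocity = story points completed per sprint. Averaging over the sprints
// so far gives a forecast that should firm up as more sprints complete.
function forecastSprintsRemaining(completedPerSprint, backlogPoints) {
  var total = completedPerSprint.reduce(function (sum, pts) {
    return sum + pts;
  }, 0);
  var velocity = total / completedPerSprint.length;
  return {
    velocity: velocity,
    sprintsRemaining: Math.ceil(backlogPoints / velocity)
  };
}

// After one sprint, then after three (with a smaller backlog left).
console.log(forecastSprintsRemaining([18], 120));
console.log(forecastSprintsRemaining([18, 24, 21], 90));
```

Re-running this after every sprint, with the re-prioritised backlog, is what lets the prediction tighten over time.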

DEV303 - Source Code Management with Microsoft Visual Studio 2010

Branching has been improved: it's now a more first-class part of TFS. Branches have permissions associated with them to allow or prevent certain users from creating or merging branches. It's a faster process now as no files have to be checked out (or even copied to your local machine) to create a branch in TFS.

You can create private branches by branching code and setting permissions on the new branch. Also, there's a graphical tool that shows how branches have been created and how they relate to one another. This is interactive, so branches can be created or merged from here.

When viewing the history of a file or project, changesets that were branches can be drilled into to show the changes that happened in the main branch, prior to it being branched into your project.

Changesets can be tracked visually through branches and merges - you can show where a change has been migrated between branches - either on the hierarchical diagram of changesets or on a timeline.

It was always a pain to merge changes where there are conflicting file renames. Fortunately this has been significantly cleaned up. The conflict is now correctly detected and you're told the original name of the file as well as the two conflicting new names.

Similar fixes have been implemented for the problem areas of moving files, making edits within renamed files, renaming folders, etc.

These version model changes make it a whole lot clearer what's going on if you view the history for a file that's been renamed - even if it's renamed to the same name as a file that was previously deleted. If you're using VS2005/2008 with TFS2010, you'll need a patch to ensure this works.

Rollbacks are now a proper feature - you don't have to resort to the command line power tool to do these. Also they now properly roll back merges (previously TFS wouldn't register that the merge had been undone, so re-merging was very difficult).

A single TFS server can now have multiple Team Project Collections. These are sets of projects with their own permissions, meaning that different teams can use the same TFS installation without access to one another's projects.

WIA403 - Tips and Tricks for Building High Performance Web Applications and Sites

This was a fast-paced session with lots of quick examples. I've not listed them all, but a few of them are here:

Simplifying the CSS can improve the performance of the browser. Basically speaking, the simpler the CSS rule, the more performant it will be. Also, using "ul > li" to specify immediate children is much more efficient than catching all descendants with "ul li".

JavaScript performance can be improved by making sure you use variables that are as local as possible. Similarly, the more local the property on an object (i.e. on the object itself or on its prototype), the quicker it can be accessed.
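A small sketch of the idea, using a made-up global config object to stand in for any deeply nested lookup. How much this helps in a given browser is something to measure rather than assume.

```javascript
// A made-up global config object, standing in for any deeply nested lookup.
var app = { settings: { pricing: { taxRate: 0.2 } } };

// Slower pattern: walks app -> settings -> pricing -> taxRate on every pass.
function totalWithTaxSlow(prices) {
  var total = 0;
  for (var i = 0; i < prices.length; i++) {
    total += prices[i] * (1 + app.settings.pricing.taxRate);
  }
  return total;
}

// Faster pattern: resolve the lookup once into a local variable.
function totalWithTaxFast(prices) {
  var multiplier = 1 + app.settings.pricing.taxRate;
  var total = 0;
  for (var i = 0; i < prices.length; i++) {
    total += prices[i] * multiplier;
  }
  return total;
}

// Both versions give the same answer; only the lookups differ.
console.log(totalWithTaxSlow([10, 20]) === totalWithTaxFast([10, 20]));
```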

A powerful feature of JavaScript is that it can evaluate and execute code in strings at runtime. However, this can be very slow. It's often used to run code with setTimeout - it's much better to use an anonymous function instead.
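For example, something like this (showMessage and greetLater are hypothetical helpers invented for the sketch; the string form is left commented out because it only works in browsers):

```javascript
var messages = [];
function showMessage(text) {
  messages.push(text);
}

// Slow: the string has to be parsed and evaluated when the timer fires.
// setTimeout("showMessage('hello world')", 100);

// Better: pass an anonymous function, which can also close over locals.
function greetLater(name, delayMs) {
  return setTimeout(function () {
    showMessage("hello " + name);
  }, delayMs);
}

greetLater("world", 100);
```

The function form has the added benefit that `name` is captured directly, with no string concatenation or quoting to get wrong.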

Getters and setters for properties are generally good programming practice. However, as JavaScript isn't compiled, the slight overhead of traversing the setter/getter method can double the time taken to access the property.

Reading the length property of a string or array on every pass of a loop isn't free: the session's claim was that it has to count all the items each time. Thus saying for (var i = 0; i < myArray.length; i++) is inefficient, and caching the length in a variable makes it faster. Or if you're iterating over DOM elements, you can use the firstChild and nextSibling properties instead: for (var el = this.firstChild; el != null; el = el.nextSibling)
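Both loop patterns, sketched so they run outside a browser: plain objects with firstChild/nextSibling properties stand in for DOM elements here, and the ids are invented.

```javascript
// Cache the length once instead of reading it on every iteration.
function sum(items) {
  var total = 0;
  for (var i = 0, len = items.length; i < len; i++) {
    total += items[i];
  }
  return total;
}

// Walk children via firstChild/nextSibling rather than an indexed collection.
function childIds(parent) {
  var ids = [];
  for (var el = parent.firstChild; el != null; el = el.nextSibling) {
    ids.push(el.id);
  }
  return ids;
}

// Stand-ins for a <ul> with three <li> children.
var third = { id: "c", nextSibling: null };
var second = { id: "b", nextSibling: third };
var first = { id: "a", nextSibling: second };
var list = { id: "list", firstChild: first };

console.log(sum([1, 2, 3]));  // 6
console.log(childIds(list));  // [ 'a', 'b', 'c' ]
```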

Having a lot of cases in a switch statement is also slow: each case has to be checked in turn. A sneaky trick that can be employed is to build an array of functions instead, and just call the appropriate one. Obviously this doesn't apply in every situation.
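A minimal sketch of the array-of-functions trick, assuming the cases are small consecutive integers (the handlers themselves are placeholders):

```javascript
// Instead of: switch (code) { case 0: ...; case 1: ...; case 2: ...; }
// index straight into an array of functions.
var handlers = [
  function () { return "zero"; },
  function () { return "one"; },
  function () { return "two"; }
];

function handle(code) {
  var handler = handlers[code];
  // Equivalent of the switch's default branch.
  return handler ? handler() : "unknown";
}

console.log(handle(1)); // one
console.log(handle(7)); // unknown
```

This only works neatly when the case values map onto array indexes; for string keys an object used as a lookup table plays the same role.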

Rather than having many small images on your page, it's better to have one large image and specify offsets for each one you want to show. SpriteMe is a handy tool that will make this mosaic for you: it scans your page for images and then glues them all together.
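The offsets are simple arithmetic. Here's a sketch assuming a sprite sheet with equally sized images laid out left to right in a single row; the function name and icons.png are made up.

```javascript
// Hypothetical sprite sheet: images laid out left to right in one row.
// Returns the CSS needed to show the image at `index`.
function spriteStyle(index, tileWidth, tileHeight, sheetUrl) {
  var offsetX = index * tileWidth;
  return {
    width: tileWidth + "px",
    height: tileHeight + "px",
    // Negative offset shifts the sheet left so the wanted tile is in view.
    background: "url(" + sheetUrl + ") -" + offsetX + "px 0"
  };
}

console.log(spriteStyle(2, 16, 16, "icons.png").background);
// url(icons.png) -32px 0
```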

Doloto is a tool that monitors your JavaScript and tracks which functions are called. It can then generate JavaScript library files that are dynamically loaded in the page as they are needed. It ensures that the most common functions are available immediately and others are loaded later. A quick demo on Google Maps showed that it could reduce the bandwidth spent on retrieving JavaScript files by 89%. Impressive stuff!

Microsoft Expression SuperPreview can render a page as it would appear in different versions of different browsers (Firefox, IE6, 7, and 8). It will show the results side-by-side (or even superimposed on one another) and even spot the differences for you (and highlight them).

WIA305 - What's New in ASP.NET MVC

A new feature is to be able to compose a view out of multiple calls to actions. This appears as <% Html.RenderAction() %>. This is a little smarter than Html.RenderPartial as you can perform any necessary business logic where it belongs in the correct controller instead of having to shoe-horn it in elsewhere.

Areas allow you to group parts of your application into logical groups. An area behaves like a folder in your project, with subfolders for models, views and controllers. There's also an AreaRegistration class that registers routes for the Area. Global.asax has to call AreaRegistration.RegisterAllAreas to activate these. By default, the area name appears as the first segment of the URLs for its views.

An exception is thrown at runtime if Areas contain controllers with the same name. This can be circumvented by specifying which namespace should be used for the controller, when you register the route.

Working with ASP.NET MVC 1, I've felt frustrated with the validation. A new model allows you to specify your validation once and have it applied to each layer. Your validation rules can be specified by using Data Annotation attributes, or in an XML file, or elsewhere if you write your own provider. To enable client-side validation you only need to include a couple of Microsoft JavaScript libraries and add the helper method <% Html.EnableClientValidation(); %> to your page. If you invent your own validation rules, you'll also have to write your own JavaScript version of that validation logic - the built-in framework passes the rules as JSON that you can intercept on the client.

There are some new helper methods - Html.Display, Html.DisplayFor, Html.DisplayForModel, Html.Editor, Html.EditorFor and Html.EditorForModel. Given a model, these display read-only or input field versions of all the fields on the model. You can define your own templates for these - either by type (to implement your own date picker, for example), or by giving the name of a partial view that renders an editor for the whole model. This respects inheritance too: if no template has been defined for Employee, it will fall back to the template defined for Person.

A nice little tweak is that by default, JsonResult now won't allow HTTP GETs. This dodges a JSON hijacking vulnerability (where a malicious site reads JSON that your site returns to a cross-site GET request), though you can override it if you really want.
